Transforming Research: Pairing NotebookLM with Local LLM Boosts Productivity

Users are finding that pairing NotebookLM with a local Large Language Model (LLM) can noticeably speed up digital research workflows. This hybrid approach combines NotebookLM's source-grounded answers with the speed and privacy of a model running on the user's own machine, creating a more efficient and controlled research environment.

Enhancing Research Workflows

For many professionals engaged in complex projects, traditional research methods can be cumbersome. NotebookLM serves as a valuable tool for organizing research and generating insights based on user-uploaded materials. However, it can lack the immediacy and flexibility of a local LLM. By experimenting with a connection between these two resources, users are discovering a more streamlined research process.

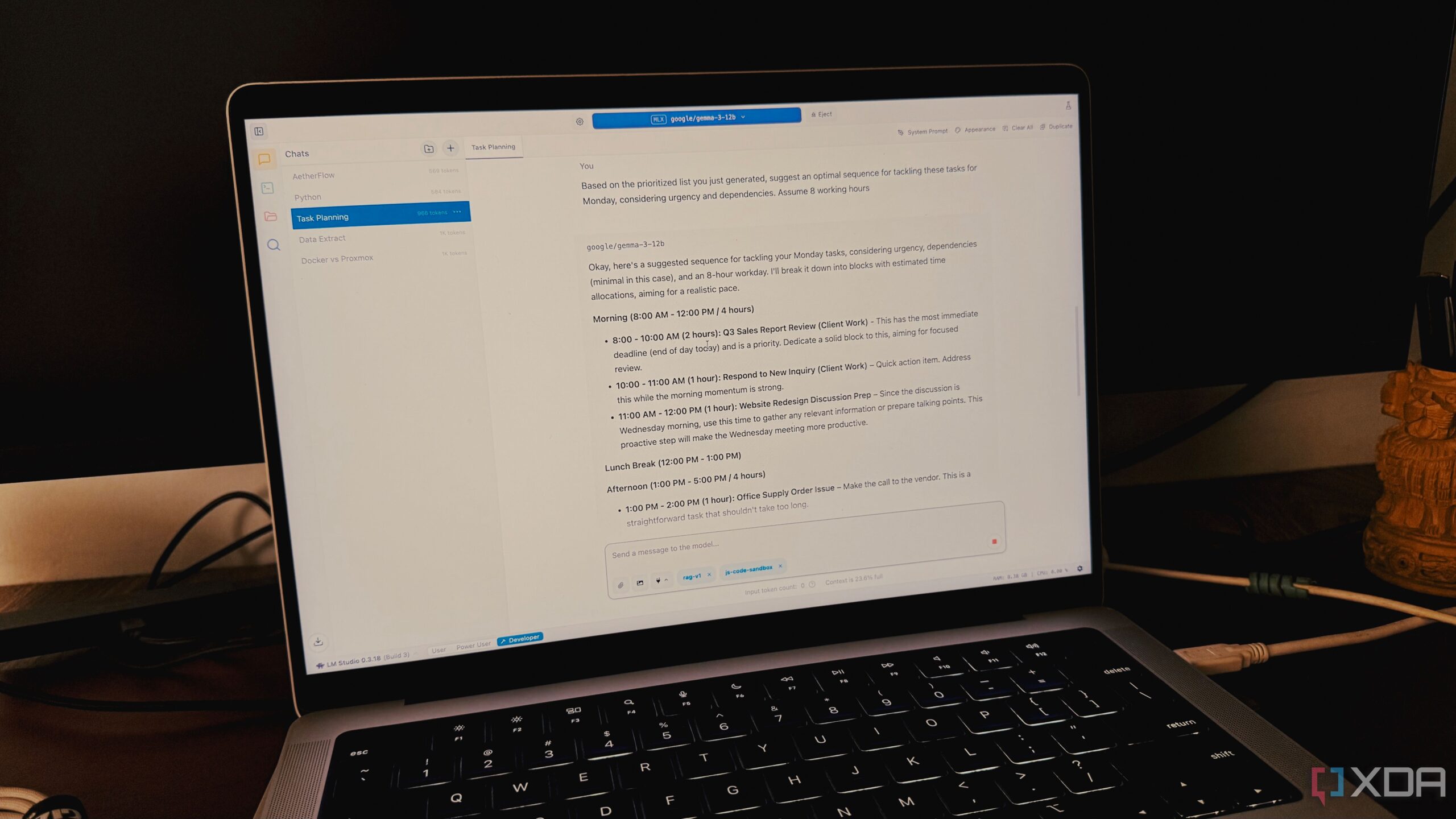

The workflow begins with a local LLM running in LM Studio, which lets users run a capable model on their own hardware, such as OpenAI's 20B-parameter open-weight model. This setup enables users to quickly gather an overview of complex topics, such as self-hosting applications via Docker. The local LLM can generate structured overviews in seconds, laying the groundwork for more detailed explorations.
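For readers who prefer to script this first step, the following is a minimal sketch, assuming LM Studio's local server is running with its OpenAI-compatible API at the default address `http://localhost:1234/v1`. The model name `local-model`, the prompt wording, and the function names are placeholders for illustration, not details from the workflow described here.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is enabled and listening on its
# default address. Change the URL if your setup uses another port.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_overview_request(topic, model="local-model"):
    """Build a chat-completions payload asking for a structured overview.

    "local-model" is a placeholder; use the identifier LM Studio shows
    for the model you have loaded.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "You write concise, structured overviews "
                           "with headings and bullet points.",
            },
            {
                "role": "user",
                "content": f"Give me a structured overview of: {topic}",
            },
        ],
        "temperature": 0.3,
    }


def request_overview(topic, url=LM_STUDIO_URL, timeout=120):
    """Send the payload to the local server and return the reply text."""
    data = json.dumps(build_overview_request(topic)).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the text under choices[0].message.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(request_overview("self-hosting applications via Docker"))
```

Because the request never leaves the machine, the topic and the generated overview stay local until the user chooses to upload them elsewhere.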

Achieving Greater Efficiency

Once the structured overview is created, users can easily transfer this information into NotebookLM, where it is treated as a source for further inquiries. This method allows for a more accurate and context-rich understanding of the subject matter. For example, users can ask specific questions about essential components required for self-hosting applications and receive swift, relevant responses.
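The hand-off itself can be as simple as saving the overview to a file and adding it to a notebook as a source. The sketch below assumes the overview is already available as a string and that the file is uploaded manually through NotebookLM's interface; the function name and folder name are hypothetical.

```python
import re
from pathlib import Path


def save_overview_as_source(title, overview_text, out_dir="notebooklm_sources"):
    """Write the overview to a Markdown file ready for manual upload."""
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)
    # Turn the title into a safe file name, e.g. "self-hosting-with-docker".
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-") or "overview"
    path = folder / f"{slug}.md"
    path.write_text(f"# {title}\n\n{overview_text.strip()}\n", encoding="utf-8")
    return path


if __name__ == "__main__":
    saved = save_overview_as_source(
        "Self-Hosting with Docker",
        "- Docker Engine\n- Reverse proxy\n- Persistent volumes",
    )
    print(f"Upload this file to NotebookLM as a source: {saved}")
```

Keeping one file per topic makes it easy to see which local overviews have been added to which notebook.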

Additionally, once the overview is in NotebookLM, its Audio Overview feature can turn the research into a podcast-style discussion. This allows users to absorb information while multitasking, making better use of otherwise idle time.

Another significant advantage is the source-checking and citation capabilities within NotebookLM. As users ask questions, the interface attaches citations that link each answer back to the relevant passage in the uploaded sources, saving considerable time previously spent on manual verification. This makes it easier to check a claim without cross-referencing multiple documents by hand.

For those looking to maximize productivity while maintaining control over their data, this combination of local LLMs and NotebookLM represents a modern blueprint for effective research. Users are encouraged to explore how this integration can reshape their approach to complex projects and improve overall efficiency in their workflows.

The possibilities for enhancing productivity through this hybrid method are vast and continue to evolve. As more professionals adopt this strategy, the impact on research methodologies may become increasingly profound, paving the way for future innovations in the digital research landscape.
